|

Return to Previous Chapter: Chapter 2 - An Explanation of the Proposed Heuristic

Continue to Next Chapter: Chapter 4 - A Refinement of the Search Process

Everything is vague to a degree you do not realize till you have tried

to make it precise. - Bertrand Russell

The goal is to transform data into information, and information into insight - Carly Fiorina, American

business executive, best known as former CEO (1999–2005) and Chairman of the Board (2000–2005) of Hewlett-Packard

Everything that can be counted doesn't necessarily count; everything

that counts can't necessarily be counted. - Albert Einstein

The indispensable first step to getting the things you want out

of life is this: decide what you want. - Ben Stein

It's not hard to make decisions when you know what your values are.- Roy Disney

Planning is bringing the future into the present so that you can do something about it now. - Alan Lakein

It is far better to foresee even without certainty than not

to foresee at all. - Henri Poincare

All human situations have their inconveniences. We feel those of the present

but neither see nor feel those of the future; and hence we often make troublesome changes without amendment, and frequently

for the worse. - Benjamin Franklin

Whether your objectives have been given to you by your boss or you're

creating objectives, make sure they are SMART—specific, measurable, achievable, realistic, and time bound.

Until you can measure something and express it in numbers, you have only

the beginning of understanding. - Lord Kelvin

If you want it, measure it. If you can't measure it, forget it. - Peter Drucker

3. A Specification for an Evaluation Function which Uses this Heuristic

"The main task of an intelligent system is to intelligently determine what options will be looked at...

the building blocks for this are grouping, focusing attention, and searching" - van Wezel, Jorna, Meystel, Planning in Intelligent

Systems, p.529

"the key to building practical intelligent systems lies in understanding

how to focus attention on what is important and ignore what is irrelevant. Attention is a mechanism for allocating sensors

and focusing computational resources on particular regions of time and space. Attention allows computational resources to

be dedicated to processing input that is most relevant to behavioral goals. Focusing of attention can be accomplished by directing

sensors toward regions in space classified as important and by masking, windowing, and filtering out input from sensors or

regions in space classified as unimportant." - James Albus, Engineering of Mind, p.80

"The brain uses vast amounts of memory to create a model of the world. Everything you know and have learned is stored

in this model. The brain uses this memory-based model to make continuous predictions of future events. It is the ability to

make predictions about the future that is the crux of intelligence." - Jeff Hawkins, On Intelligence, p.6

"Yet it is my contention that the two, abstract planning and concrete calculation, are really closely tied together;

that in one as in the other the most important thing is the ability to focus one's attention on the few relevant moves, and

dispose of the rest quickly and confidently." - Julio Kaplan, The ABC's of chess, Chess Life and Review, July 1978

What does the evaluation function look like for the proposed

heuristic? The following specification is as detailed as I can determine at this point in time:

Version 1.4, 30 August 2007

1. For each terminal position we evaluate in our search tree,

we generate unrestricted "future mobility" maps for each piece of the moves it can make 1, 2, and 3 moves into the future.

We consider moving pieces out of the way or capturing pieces in order to accurately calculate this "future mobility" (See

Appendix E: Example Number 1) where we work out the contents of the mobility tables for three

pieces in a sample middle-game position).

2. We award each piece a bonus based on how much territory it

covers on the board (and how much damage it can inflict or defensive support it can provide) in 3 moves. This could be in

lieu of any fixed number like 300 for Bishop, 500 for rook, etc. Our bonus for mobility in itself will be small -

it is the mobility that leads to positional pressure on another piece that we are primarily interested in.

3. We give a piece an offensive score based on the number

and type of enemy pieces we can attack in 3 moves. We give a piece a defensive score based on how many of our own

pieces it can move to defend in 3 moves. Again, this bonus is reduced for each move it takes to potentially defend such piece.

Again this information is derived from the "future mobility" move maps we just calculated. Extra points can be given

for weak or undefended pieces that we can threaten.

4. The proposed heuristic determines also king safety from these

“future mobility” move maps. We penalize our king if our opponent can move pieces into the 9-square template around

our king within a 3 move window. The penalty is larger if the piece can make it there in 1 or 2 moves, or if the piece

is a queen or rook. We penalize our king if multiple enemy pieces can attack the same square near our king. Our king is free

to move to the center of the board - as long as the enemy cannot mount an attack. The incentive to castle our king will not

be a fixed value, such as a quarter pawn for castling, but rather the reduction obtained in the enemy's ability to move pieces

near our king (the rook involved in the castling maneuver will likely see increased mobility after castling is performed)

The king will come out of hiding "naturally" when the number of pieces on the board is reduced and the enemy does not

have the potential to move these reduced number of pieces near our king. We are likewise free to advance the pawns protecting

our king, again as long as the enemy cannot mount an attack on the monarch. The potential ability of our opponent to mount

an attack on our king is the heuristic we use as the basis for king safety. Optionally, we will consider realistic restrictions

that our own pieces can make to our opponent's ability to move pieces near our king, as discussed in point 6 below. For "King

mobility" in the middle game, we can reward our King for a potential to move to a safer square. For example, if our King has

the potential to castle in the future, we would give it a bonus based on its ability to move to a safer square, and

how safe that square actually is.

5. Pawns are rewarded based on their chance to reach the last rank,

and what they can do (pieces attacked and defended in 3 moves, whether or not they are blocked or movable). The piece mobility

tables we generate should help us identify pawns that cannot be defended by other pawns, or other pieces - it is this weakness

that we should penalize. Doubled or isolated pawns that cannot be potentially attacked or blockaded by our opponent should

not be penalized. Pawns can be awarded a bonus based on the future mobility and offensive/ defensive potential of

a queen that would result if it made it to the back rank, and of course this bonus is reduced by each move it would take the

pawn to get there.

6. The diagonal squares in front of pawns are unlikely locations for

enemy pieces (because the pawn could capture them), and so we should reduce the mobility bonus for enemy pieces that trace

their mobility through stopping points on these particular squares. [In fact, a more ambitious approach will reduce

the mobility bonus for all pieces when they trace mobility through squares attacked by lesser-value pieces.

This concept will result in a more positional style of play.]

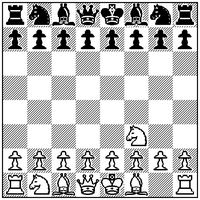

Consider now the starting position where our king-side knight has moved to square f3. Let's look at

the piece mobility tables - an 'x' represents a potential move.

|

| Figure 3.1 |

1-move map

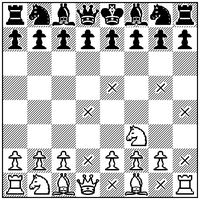

|

| Figure 3.2 |

2-move map

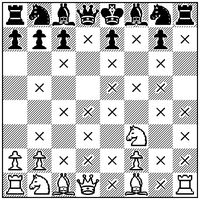

|

| Figure 3.3 |

3-move map

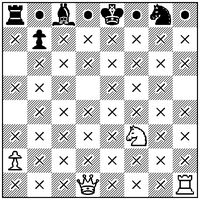

|

| Figure 3.4 |

As mentioned previously, before we trace mobility through friendly

pieces we must first check and then spend 1 move moving the friendly piece out of the way.

The squares in figure 3.4 above marked with a circle represent potential moves where our knight has

to first stop on a square attacked by an enemy pawn. The enemy pawn represents a limiting factor

to our piece as it attempts to accomplish objectives. From experience, it is unlikely that the knight can move directly

to this square without being captured, at this present point in time. But our knight might be able to move

to the square in question if our opponent is under pressure and has to move a piece or pieces out of the way. We

would therefore give our knight a reduced bonus for future mobility to these kinds of squares even though it is unlikely at

present that the piece can move directly to this square (due to limiting factors). The positional pressure that our knight

can exert on these kinds of squares (and any pieces located on them) is real but small in quantity, and should

be accounted for in our evaluation function.

However we decide to model (and therefore estimate) the positional pressure of our pieces, we

follow a two-step (or possibly a three-step) process:

1. we calculate the unrestricted future mobility of each chess piece 3 moves into

the future (by updating each piece mobility database from the previous position), then

2. we estimate the realistic operating range/ level of engagement by determining

the limiting factors that bound the unrestricted mobility [see Systems Engineering and Analysis, 4th Edition, by Blanchard and Fabrycky p.162]. We reduce the bonus for accomplishing objectives if the required moves can only be traced through squares

that are likely to result in the piece being captured before it can accomplish its objective (such as, attacking an enemy

piece or defending a friendly piece).

[3. additionally, we may wish to target the limiting factors themselves (the pieces which

restrict the range of our pieces) either directly or indirectly, for example by overloading them.]

At a minimum, we reduce the bonus given to pieces for future mobility traced through

squares attacked by enemy pawns, and through squares attacked by lower-valued enemy pieces. We may use another

scheme for determining mobility reduction for piece movement through squares attacked both by friendly and enemy pieces (see

Appendix G: Tracing Mobility through Squares Attacked by both Friendly and Enemy Pieces for a discussion of this subject).

We might be bold enough to use Botvinnik's notation and label the

black pieces under the circles in Figure 3.4 as "type IV" pieces - the white knight can theoretically trace mobility to these

squares, but limiting factors prevent the knight from moving to the square in a way that the piece would not

be captured. The 7 black pawns under direct attack from the knight in Figure 3.3 (marked with an 'x') we might classify as

"type III" pieces since the white knight can realistically trace mobility to these squares (no limiting factors present).

If there were any black pieces directly capturable by the white knight, we would call these "type II" pieces (they

would be on the squares labeled with an "x" in figure 3.2). Pieces already captured and removed from the board are classified

as type I pieces. Pieces therefore are placed into category IV as they fall into distant range of the enemy pieces,

become category III as the enemy can directly target them without opposition, become category II as they now become directly

capturable, and end life as category I when they sit in the box after they are captured. The positional evaluation function

classifies pieces in these 4 categories and monitors the pieces as they move back and forth from these categories as

the game progresses.

Notice that we did NOT give any piece a bonus for *sitting on a square*

like a traditional chess program. We reward each piece for its predicted ability to accomplish strategic objectives,

exert positional pressure, and restrict the mobility of enemy pieces, based on the current set of pieces on the chess

board at the time we are calling our evaluation function. This means that many of the pieces will have moved from their

current positions and have a different set pieces in their line of sight.

Here is the bonus that the traditional chess program "crafty" (by

respected chess program author and academic Dr. Robert (Bob) Hyatt) gives rooks and queens for "sitting on squares".

Imagine a chessboard superimposed over these tables. Note that this bonus is awarded regardless of the location of other pieces

on the board. There is nothing wrong with this approach - many successful chess programs (including the one by Dr. Hyatt)

have been built using this method - it is just a different way to estimate the positional pressure produced by the chess

pieces:

int rval_w[64] =

-3, -3, 1, 3, 3, 1, -3, -3,

-3, -3, 1, 3,

3, 1, -3, -3,

-3, -3, 1, 3, 3, 1, -3, -3,

-3, -3, 1, 3, 3, 1, -3, -3,

-3, -3, 1, 3, 3, 1, -3, -3,

-3, -3,

1, 3, 3, 1, -3, -3,

-3, -3, 1, 3, 3, 1, -3, -3,

-3, -3, 1, 3, 3, 1, -3, -3

;

int qval_w[64] =

-10, -8, 0, 0, 0,

0, -8,-10,

-10, 2, 8, 8, 8, 8, 2,-10,

-10,-10, 4, 10, 10, 4,-10,-10,

-10, 2, 10, 12, 12, 10, 2,-10,

0, 2, 10, 12, 12, 10, 2, 0,

0, 2,

4, 10, 10, 4, 2, 0,

-15, 0, 4, 5, 5, 4, 0,-15,

0,

0, 0, 0, 0, 0, 0, 0

;

Continued Explanation of the Proposed Heuristic

If we do things right, the information present in the 1st, 2nd and

3rd order mobility maps that we have generated allow us to estimate the positional pressure produced by

the chess pieces. This pressure is measured by taking into account the presence and abilities of the other pieces in the current

position on the board. We create an agile model of the future by calculating "realistic mobility" of the pieces (unrestricted

mobility modified by restrictions placed by enemy pieces) and then measure the ability of the pieces to realistically pressure

the opponent or support friendly pieces. In this way we bring the future into the present (a definition of planning) so we

can 1. fill our search tree with positions that matter and 2. reward pieces for the positional pressure they create at our

endpoint evaluation.

From these calculations we can make a reasonably accurate

estimate of the "winnability" of a position, or determine if compensation exists from sacrificing a piece. This evaluation

'score' also helps "steer" the search process, as the positional score is also a measure of how "interesting" the position

is and helps us determine the positions we would like to search first. I would point the interested reader to the description

of the evaluation function for the 1970's era Northwestern University chess program Chess 4.5, found on page 93-100 in Peter

W. Frey's Chess Skill in Man and Machine, for comparison.

The technical difficulty involved in the proposed heuristic is in

the “counting” exercise that goes into keeping the "future mobility" move maps up to date. To save time,

we can update our mobility database from the previous position as we move a piece. Most of the 'unrestricted mobility'

information for each piece will remain unchanged, or can be moved around with a programming structure called a "pointer" as

we take into account the new potential moves that result when a piece moves on the chessboard. This process, outlined in

example 1 in the Appendix, is not simple to implement. The proposed evaluation function will spend much of its time updating

the piece mobility database, and then counting the 'cues' present in the position that determine how desirable or

interesting the position is (and worth further searching). The evaluation function will return a score that represents

the estimated winnability of the position, based on the material, positional pressure, king safety, mobility, offensive and

defensive potential and other factors that contribute positively to measured performance.

Testing and Calibration

We can measure this performance by setting up automated tournaments

of games with other chess programs, such as a tournament of a few hundred games with a time control of 1 minute per game

plus 1 second per move. This fast and convenient method is a suggested starting point for test and evaluation. Extensive testing

by the CEGT group (Chess Engines Grand Tournament) at much longer time controls (see CEGT web page http://www.husvankempen.de/nunn/) indicates that tournaments using longer time controls do not usually produce performance numbers that differ significantly.

Another Method for Testing

Alternatively, we can use another method. If we base our evaluation

function on a winning percentage rather than the traditional "centipawns" used by most programs, we can calibrate

our evaluation function against known positions from opening theory. If the evaluation must be performed in centipawns, we

can convert to winning percentage using this table (posted on rybkaforum.net by V. Rajlich, modified to represent

pawns as 1.00):

% to win advantage in pawns

----------------------------------

50% -> .00

55% -> .22

60% -> .43

65% -> .64

70% -> .86

75% -> 1.07

80%

-> 1.29

85% -> 1.51

90% -> 1.89

95% -> 2.41

Table 3.1

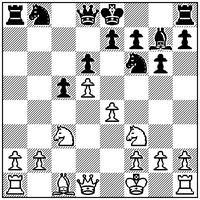

For example, let's look at the following position from opening theory, reached after the move

sequence 1. d4 Nf6 2. c4 c5 3. d5 b5 4. cxb5 a6 5. bxa6

g6 6. Nc3 Bxa6 7. Nf3 d6 8. e4 Bxf1 9. Kxf1 Bg7

| Position A |

|

| White to play, after 9...Bg7 |

If we look at a database of current master and grandmaster games that

have reached this position such as the Rybka II opening book, (opening explorer from chessgames.com or similar device

from chesslab.com can also be used) we can see that white has won 47.3 percent of the time, drawn 27.3 percent of

the time and lost 25.4 percent of the time. This equals a winning percentage of 60.9 percent. Our "evaluation" of

this position should be 60.9 percent, or close to it. Using Table 3.1 above and interpolating, this winning percentage

corresponds to a centipawn score of 0.468. [Chesslab reports these numbers as 39 percent white win 34 percent drawn and 27

black win for a winning percent of 56%. Keep in mind that new games are always being added to both databases so these numbers

might change.] If we select 200 positions from opening theory that are generally empty of tactical possibilities,

we can store the expected winning percentage from current practice, and determine our program's estimated winning percentage

(the output of its evaluation function). By the way, the Rybka 2.3.2a evaluation of the position in the above diagram (Position

A) is +0.49 after 26-ply analysis with the continuation 10.g3 0-0 11.Kg2 Nbd7 12.a4 Qa5 13.Bd2 Qa6 14.Re1 c4.

Using this procedure, we can calibrate our program's evaluation function with results

from current master-level practice. For each position from opening theory we evaluate, we subtract our program's evaluation

from the actual winning chances from master level play and generate an error function. From this information we can calculate

a mean and a standard deviation for this error function. We can look at positions that for some reason do not score well (perhaps

there are deeply hidden tactical opportunities) and eliminate these positions from consideration. We can then make changes

to our evaluation function and immediately judge the accuracy of those changes. We can even determine the weighting of the

various parameters in our evaluation function by randomly bumping up or down the various parameters and seeing if we have

a more accurate estimate of the winning chances of the various test positions we have obtained from opening theory.

When we are confident that our evaluation function correlates with the winning chances of master

level games from opening theory, we can construct automated tournaments against chess programs of known playing strength to

evaluate how well our program does in simulated game conditions, especially where the slowness of our evaluation function

will hurt the tactical ability our our program's performance. Knowledge can be added to our evaluation function, but only

when the tournament performance of our program under game conditions is improved.

Continuing the explanation

If a chess expert decides to sacrifice a pawn in a chess game,

he or she hopes that the present and future positional pressure of the sacrificed pawn is exceeded by the increase

in positional pressure of his or her remaining pieces. It is the positional pressure of

our pieces, and the potential ability to attack or defend strategic targets, that compensates for the loss of “material”.

We are using a heuristic to estimate this positional pressure, much the same way a human chess player does.

Now that we have precisely defined (by means of our proposed rule-of-thumb

called a heuristic) what kind of positions we are looking for, the search portion of our chess program can try out different

moves. The engine "knows" how to evaluate the positions, and will focus along lines that are interesting, and perhaps might

even play a game of chess that looks reasonably human.

The proposed heuristic (ideally) obtains a more realistic evaluation

of the winning chances (of a given position) by extracting information from the distance and future mobility of the pieces

on the board instead of looking up numbers in tables. The machine is spending time searching for ways to increase the real

and measured positional pressure on the opponent, not for ways to move its pieces to the center of the board. There is

a difference - perhaps insignificant when playing a beginner, but significant enough when playing someone capable of

playing a strong positional game of chess. Note that the bonus we give our knight to move to square f3 (from the starting

position at g1) is based on the positional pressure it is capable of creating in the present position - offensive and defensive.

Note also that there are many ways to reward pieces based on the distant

pressure they are capable of creating - one would have to build a chess program and run tests to determine which method has

the strongest performance.

In summary, we have created a model of positional pressure in our evaluation function. The

proposed method is certainly not the only way to do this. There is no doubt in my mind that someone will

find a better way to model this. The question we should ask is: do we find the model useful for focusing the

attention of our search efforts? If not, how might we change the model? We might begin to think of an upper and lower

bound for positional pressure. An upper bound might consider what the pieces can accomplish using unrestricted

mobility 3 moves into the future. A lower bound might consider what the pieces can accomplish considering only mobility through

squares not attacked by an enemy piece. We also might consider a middle ground, somewhere in between these

two extremes, where we consider placing realistic restrictions on the unrestricted piece mobility before awarding points for

things the pieces can (potentially) accomplish. There exist objectives and limiting factors, as described

by Systems Engineering and Analysis, 4th Edition, by Blanchard and Fabrycky, 1999, p.162-163. Additionally, the decision protocol of Orasanu and Connolly (1993) includes the identification

of the constraints, resources, and goals facing the decision maker.

Michalewicz and Fogel in How to Solve It: Modern Heuristics have this to say about using

models to solve problems:

p.31"Whenever you solve a real-world problem, you have to create a model of that problem first. It is critical

to make the distinction that the model that you work with isn't the same as the problem. Every model leaves something out.

It has to - otherwise it would be as complicated and unwieldy as the real-world itself. We always work with simplifications

of how things really are. We have to accept that. Every solution we create is, to be precise, a solution only to the model

that we postulate as being a useful representation of some real-world setting that we want to capture. The trouble with models

is that every one of them has an associated set of assumptions. The most common mistake when dealing with a model is to forget

the assumptions."

Starfield, Smith and Bleloch in How to Model It emphasize that problem solving and thinking

revolve around the model we have created of the process under study:

p.21"The point we want to make is that thinking consciously and explicitly about models

is a crucial part of problem solving in general."

The model we have created for positional pressure in our evaluation function allows our machine to

intelligently focus the search efforts on likely moves, and by proper ordering of our moves, to produce faster

cut-offs in our alpha-beta pruning. We desire a proper balance between an anticipatory and a reactive planning strategy.

From van Wezel, Planning in Intelligent Systems:

p.65"At the symbolic level, anticipation is elaborating a representation of the future (forcasting)... expert

process operators have more planning ability than beginners because they anticipate more"

p.96"Anticipation is costly and limited by time constraints. It can be a handicap when it goes beyond actual

competency, so that reactive strategies are necessary. The focus on planning strategies should not let us forget the adaptive

power of reactive strategies, especially when time constraints are high or process knowledge is weak. The main problem

to solve is finding an efficient compromise between anticipative and reactive strategies to reach a satisfying performance

level."

We might actually use two (or more) evaluation functions. Function 1 might be a general

purpose algorithm that will be faster in operation, but might not be completely accurate. If our score for the position in

question (using this faster method) is somewhat close to being the best move, we might wish to re-score our position with

a more accurate evaluation function, or function 2. Perhaps we use the unrestricted mobility of the chess pieces

as our function 1, and we modify this algorithm as proposed in point 6. above for function 2. We might use yet other evaluation

functions to resolve situations where safe piece mobility through certain squares cannot be accurately determined (see Appendix

G), or use search extensions to handle these cases.

Now that we have told our machine how to score each position, we need to search for the moves that

lead to the best playable continuation.

Continue to next section

Chapter 4 - A Refinement of the Search Process

|